Our Own D-View: Exploring the Future of AI

But what does the future hold for Artificial Intelligence? You ask… D-News answers! The video above was produced by a student of class Α’…


D-News… strikes again! This time with an in-depth analysis of various aspects of Artificial Intelligence ethics by Mr. John Gougoulis, who is not only an education consultant for international curriculum programs but also a passionate tech enthusiast. He agreed to demystify various areas of AI use, ranging from deep philosophical inquiries to everyday applications, so that we can get a clearer grasp of what AI ethics is all about.

What led you to study Artificial Intelligence so closely?

There were two main reasons, apart from intellectual curiosity and my embrace of change (as Heraclitus said, “there is nothing permanent except change”). First, as an educator for many years, I have always been positive about, and engaged with, the benefits and uses of education technology tools that support and enhance the personalisation of learning. Second, as a curriculum and assessment developer, I am intrigued by the opportunities these latest tools offer for writing and reviewing curricula, developing assessment items, and curating and developing other resources for students and teachers.

I believe we need to embrace AI carefully, focusing on its potential to better prepare students for the world beyond school. If used well, it could adapt to individual students’ learning needs, providing personalised support and feedback. It has the potential to customise content, identify resources, and even recommend changes to pedagogy that make teaching and learning more inclusive. It could also help with the design and delivery of assessment and feedback, for example by suggesting different ways to assess learning outcomes and by helping to design authentic assessments and resources such as exemplars, rubrics, and guides.

AI is being used in more and more areas of social life, for instance business and finance, political decision-making, and even refugee settlements. This raises a question that has long troubled philosophers of technology: which is more important, freedom or security?

I don’t think this needs to be a dichotomous question or argument. Both concepts can hold true at the same time and be equally important.

Collecting and analysing vast amounts of learner data raises concerns about data privacy and security. However, the laws and ethical standards that govern its use, and the measures that ensure sensitive learner information is handled and stored securely, should not restrict the freedom to use it.

AI models may inadvertently spread false information, engage in revisionist history, reinforce biases, or spy on us, but there are opportunities to champion responsible AI use rather than, for example, banning the technology.

In schools, for example, integrating AI concepts into the curriculum provides an opportunity to teach the basics of AI, i.e. what AI is, how it works, and how to promote the responsible and ethical use of AI systems. A better understanding of AI enables students to use the technology in responsible and effective ways, avoid harm, and develop awareness of their individual responsibility and accountability.

Recently there have been many incidents in which people bypassed AI safeguards and asked inappropriate questions that the AI was not supposed to answer, and yet it answered them. For example, ChatGPT has been asked to describe how to build a nuclear bomb. It was not supposed to give that answer, but it “fell for” an emotionally charged story. How can we make AI models’ algorithms more robust against giving dangerous and inappropriate answers?

I am not a technical person per se, but an educator interested in how AI can be applied to enhance the teaching and learning experience. I strongly believe that generative AI technology won’t be going away anytime soon, so we need to be aware of its potential risks as well as its benefits, and of how to take advantage of it. The pattern is clear: given its rapid development, adoption and adaptation, AI in education requires educators, policymakers, and EdTech professionals to work together to develop ethical frameworks and inclusive, unbiased systems, so that AI is integrated into education responsibly and ethically.

Let’s be clear: I can already search the internet for any question and, after sifting through the information, still find answers to questions that we would agree should never have been asked in the first place. More recent AI developments will not change that fact. For example, social media’s AI feeds us more and more content that reaffirms our beliefs and our view of the world and of events, right or wrong. It does not care that there are heaps of other facts that prove otherwise; it’s about money, and those algorithms serve you more ads by keeping your attention on that content.

Recently there has been a lot of debate about the algorithmic bias of artificial intelligence agents and its effects on various domains of our society. It is also said that the limited data used to create such algorithms, and the AI developers’ own prejudices, further contribute to the problem. In your opinion, how serious is this issue, and what objective criteria would you propose for determining whether an AI functions efficiently, justly, and in a morally correct way?

I have addressed part of this question in my answer on the moral dimension. As I have already said, AI offers tremendous opportunities for enhancing and personalising educational experiences, but it also brings ethical complexities and regulatory challenges. There needs to be, for example:

The history of disruptive technologies is littered with cases, like AI, in which technology advances faster than regulation and societal norms. For example, the transformation of communication and information sharing on platforms such as Facebook, Twitter, and Instagram became integral to daily life, but the extensive accumulation of data outpaced the development of data privacy laws and regulatory oversight. Another example is drone technology, which rapidly expanded from military to commercial and recreational use, outpacing regulation and raising concerns about privacy, airspace safety, and noise pollution.

It is becoming increasingly common for AI to contribute to the creation of ‘works of art’, as the countless AI-generated images online, along with AI programs that write literature, make clear. Do you believe that AI can truly make art?

AI tools are shaped by the people who create them: they are products of whoever built them and depend on the data (its quality, volume and sources) they are fed. So, based on that understanding, and in the same way that AI can do the following, I believe it could make art and write literature (of some sort, and of a quality yet to be defined):

“I think, therefore I am” (Cogito, ergo sum), Descartes declared four centuries ago. Bearing that in mind, does AI, with its radically evolving “intelligence”, understand the meaning of ‘existence’ or ‘life’? Could an AI agent ever build its own identity based on a moral compass and a personal sense of meaning in the world?

As Aristotle is often quoted as saying, “We are what we repeatedly do. Excellence, then, is not an act but a habit… moral excellence comes about as a result of habit.” As AI evolves and is directed into habitual patterns, excellence should emerge. Whether that excellence has a moral dimension will depend on the type and extent of human intervention. Any moral excellence, perspective or identity it develops will be informed by the world view of those instructing it and intervening.

I’d hazard a guess that AI tools are as ethically flawed, or not, as the people who create them, and that any inkling of moral intelligence is only a product of whoever built them. So, who teaches AI ethics or imbues it with morals? Product developers? Government regulators? CEOs? Morality is subjective and cannot be reduced to a bunch of rules.

An AI system could learn its moral compass by analysing the data it is fed, for example ethical judgements made by people (chosen somehow by the creators) who look at scenarios (also chosen somehow) and label each one as right or wrong. Even then, judgements change over time, as new methods are used to feed in more data and test the results of AI algorithms, and as the software is updated; the AI system would not really understand its moral identity or ethical behaviour. Understanding implies more than knowing: it requires critical thought and the capacity to connect, adapt and apply.

Interview Supervision

Antonis Misthos

Spyros Grigoriadis


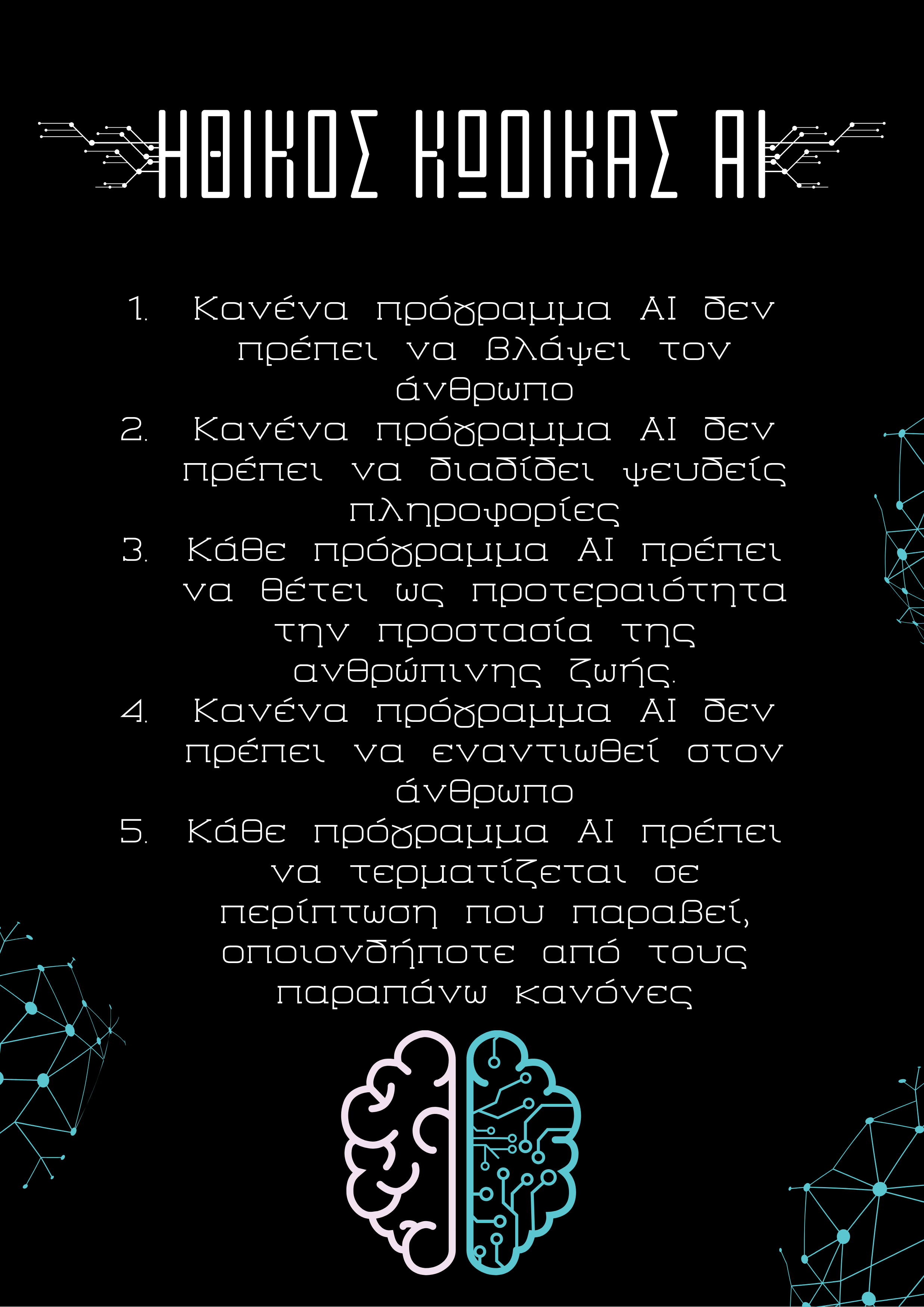

Λαμβάνοντας ως εναρκτήριο λάκτισμα τους τρεις νόμους της ρομποτικής του Ισαάκ Ασίμωφ, η D-News αποφάσισε να ολοκληρώσει το αφιέρωμά της για την Τεχνητή…

Περισσότερα

«Πολλοί από τους κινδύνους που αντιμετωπίζουμε, πράγματι, προκύπτουν λόγω της επιστήμης και της τεχνολογίας—αλλά, ακόμα περισσότερο, επειδή, έχουμε αποκτήσει δύναμη, χωρίς να αποκτήσουμε…

Περισσότερα