Our (D-)Own View: Exploring the Future of AI

But what will the future of Artificial Intelligence actually look like? You ask… D-News answers! The video above was produced by the Year A’ student…

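Entropy is a fundamental concept in physics, intimately tied to the nature of the universe. It is often described as a measure of the disorder or randomness in a system; more precisely, it measures how many microscopic arrangements are consistent with what we observe at the macroscopic level. It plays a central role in thermodynamics, statistical mechanics, information theory, and many other branches of science. In essence, entropy describes the tendency of isolated systems to evolve from ordered, less probable states toward disordered, more probable ones.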

The Second Law of Thermodynamics, often stated as “the total entropy of an isolated system never decreases over time,” is one of the most profound and universal principles in science. It says that in any isolated system (one that exchanges neither energy nor matter with its surroundings), the overall entropy increases in every real, irreversible process and at best stays constant in an idealized reversible one, and this has far-reaching implications.

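To understand entropy, consider a simple example: a hot cup of coffee left on a table. Initially, the coffee is hot and the air around it is cool. Over time, heat flows from the hot coffee to the cooler air, and the coffee cools down. This process increases the total entropy of the coffee and the surrounding air. Why? The coffee’s own entropy actually decreases as it loses heat, but the air gains more entropy than the coffee loses: a small amount of heat Q exchanged at temperature T changes entropy by Q/T, and because the air is colder, the same Q divided by a lower T gives a larger increase. The state in which the heat is spread out between the coffee and the room is overwhelmingly more probable than one in which it stays concentrated in the cup, and nature tends toward the more probable state.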
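
The argument can be checked with a short calculation. The sketch below is a minimal illustration that is not part of the original article, and it assumes hypothetical values: 0.25 kg of coffee treated as water (specific heat about 4186 J/(kg·K), taken as constant), cooling from 80 °C to 20 °C in a room large enough to act as a reservoir at a fixed 20 °C. The coffee’s entropy change is C ln(T_room / T_hot), which is negative, while the room gains Q / T_room with Q = C (T_hot − T_room); the function name is made up for this example.

```python
import math


def coffee_entropy_change(mass_kg, c_j_per_kg_k, t_hot_k, t_room_k):
    """Entropy changes (J/K) when a cup cools from t_hot_k to t_room_k.

    Assumptions (hypothetical model): constant specific heat, and a room
    that behaves as an ideal reservoir held at t_room_k.
    """
    heat_capacity = mass_kg * c_j_per_kg_k  # C = m c
    # Coffee: integrate dS = C dT / T from T_hot down to T_room.
    ds_coffee = heat_capacity * math.log(t_room_k / t_hot_k)
    # Heat released by the coffee is absorbed by the room at fixed temperature.
    q = heat_capacity * (t_hot_k - t_room_k)
    ds_room = q / t_room_k
    return ds_coffee, ds_room, ds_coffee + ds_room


if __name__ == "__main__":
    # Hypothetical cup: 0.25 kg of "water", 80 °C -> 20 °C (in kelvin).
    ds_coffee, ds_room, ds_total = coffee_entropy_change(0.25, 4186.0, 353.15, 293.15)
    print(f"coffee: {ds_coffee:+.1f} J/K")
    print(f"room:   {ds_room:+.1f} J/K")
    print(f"total:  {ds_total:+.1f} J/K")
```

With these assumed numbers the coffee loses roughly 195 J/K while the room gains roughly 214 J/K, so the total entropy rises by about 19 J/K, in line with the Second Law.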

This concept is not limited to coffee cups; it applies to the entire universe. If the universe is treated as an isolated system, its total entropy keeps increasing as time progresses. This has led to the idea of the “heat death” of the universe, a hypothetical scenario in which the universe reaches a state of maximum entropy and no usable energy remains to drive any further macroscopic processes. It is a rather bleak view of the distant future, but it follows from applying the laws of thermodynamics on a cosmic scale.

The significance of entropy is not limited to thermodynamics; it also appears in information theory. There, entropy measures the average uncertainty, or information content, of a source of data. For instance, a sequence of random letters has high entropy because you cannot predict the next letter. In contrast, a string of letters following a specific pattern has low entropy because you can anticipate the next letter with some accuracy. This notion of entropy, introduced by Claude Shannon, is used in fields such as data compression, cryptography, and the study of information content in languages and texts.
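
To make this concrete, Shannon’s formula H = −Σ p log₂ p gives the average information per symbol in bits. The minimal Python sketch below, whose function names are hypothetical, computes H for a given probability distribution and, as a rough estimate, from the letter frequencies in a string. Note the limitation of the frequency-based estimate: it only looks at how often each letter occurs, not at their order, so it cannot detect a repeating pattern; capturing that would require conditional probabilities or a compression-based estimate.

```python
import math
from collections import Counter


def shannon_entropy(probs):
    """H = sum p * log2(1/p) in bits; zero-probability outcomes contribute nothing."""
    return sum(p * math.log2(1 / p) for p in probs if p > 0)


def empirical_entropy(text):
    """Per-symbol entropy estimated from symbol frequencies in `text`.

    This ignores symbol order, so "abababab" scores the same as a
    shuffled string with four a's and four b's.
    """
    counts = Counter(text)
    n = len(text)
    return shannon_entropy(c / n for c in counts.values())


if __name__ == "__main__":
    print(shannon_entropy([0.5, 0.5]))     # fair coin: 1.0 bit
    print(shannon_entropy([0.9, 0.1]))     # biased coin: about 0.469 bits
    print(shannon_entropy([1 / 26] * 26))  # 26 equally likely letters: about 4.70 bits
    print(empirical_entropy("aaaaaaaa"))   # fully predictable: 0.0
    print(empirical_entropy("abababab"))   # 1.0, because order is ignored
```

The biased coin shows the idea from the paragraph above: the more predictable the outcome, the lower the entropy.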

Another area where entropy is crucial is statistical mechanics, which explains the macroscopic behavior of a system in terms of its particles. Entropy is tied to how energy is distributed among the particles’ available states: the more microscopic ways (microstates) there are to distribute the energy, the higher the entropy. Boltzmann expressed this as S = k_B ln Ω, where Ω is the number of microstates and k_B is Boltzmann’s constant. This has significant applications in understanding the behavior of gases, liquids, and solids, and is vital in fields like chemistry and materials science.
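
A standard textbook toy model, the Einstein solid, makes this counting explicit. It is not discussed in the original article and is used here only as an illustration under its own simplifying assumptions: a solid is modeled as N identical oscillators sharing q indivisible energy quanta, for which the number of microstates is Ω(N, q) = (q + N − 1)! / (q! (N − 1)!). The sketch below (function names are hypothetical) computes Ω and S = k_B ln Ω, then lets two small solids share energy to show which split has the most microstates.

```python
from math import comb, log

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)


def multiplicity(n_oscillators, q_quanta):
    """Omega(N, q) = C(q + N - 1, q): ways to share q quanta among N oscillators."""
    return comb(q_quanta + n_oscillators - 1, q_quanta)


def entropy(n_oscillators, q_quanta):
    """Boltzmann entropy S = k_B ln(Omega) for an Einstein solid, in J/K."""
    return K_B * log(multiplicity(n_oscillators, q_quanta))


def split_multiplicities(n_a, n_b, q_total):
    """Total microstates of two solids for each way of splitting q_total quanta.

    Entry q_a is Omega_A(q_a) * Omega_B(q_total - q_a).
    """
    return [
        multiplicity(n_a, q_a) * multiplicity(n_b, q_total - q_a)
        for q_a in range(q_total + 1)
    ]


if __name__ == "__main__":
    print(multiplicity(3, 3))  # 10
    omegas = split_multiplicities(3, 3, 6)
    print(omegas)  # [28, 63, 90, 100, 90, 63, 28]
    most_likely = max(range(len(omegas)), key=omegas.__getitem__)
    print(most_likely)  # 3: the even split has the most microstates
```

For two solids of three oscillators each sharing six quanta, the even split (three quanta each) accounts for 100 of the 462 possible microstates, more than any other split. This is the statistical reason energy tends to spread out evenly, and why higher-entropy macrostates are the ones we observe.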

In essence, entropy is a pervasive concept that helps us make sense of the universe’s tendency toward disorder, the direction of time, and even the transmission of information. It reminds us that the arrow of time points from lower to higher entropy, and understanding this concept has profound implications for science and for our picture of the world around us.

So, the next time you see a cup of coffee cooling down, remember that you are witnessing the inexorable rise of entropy: not a force, but the statistical tendency of systems to move from order to disorder, shaping the world as we know it.

Ανδρέας Μούτσελος

Student, IB1

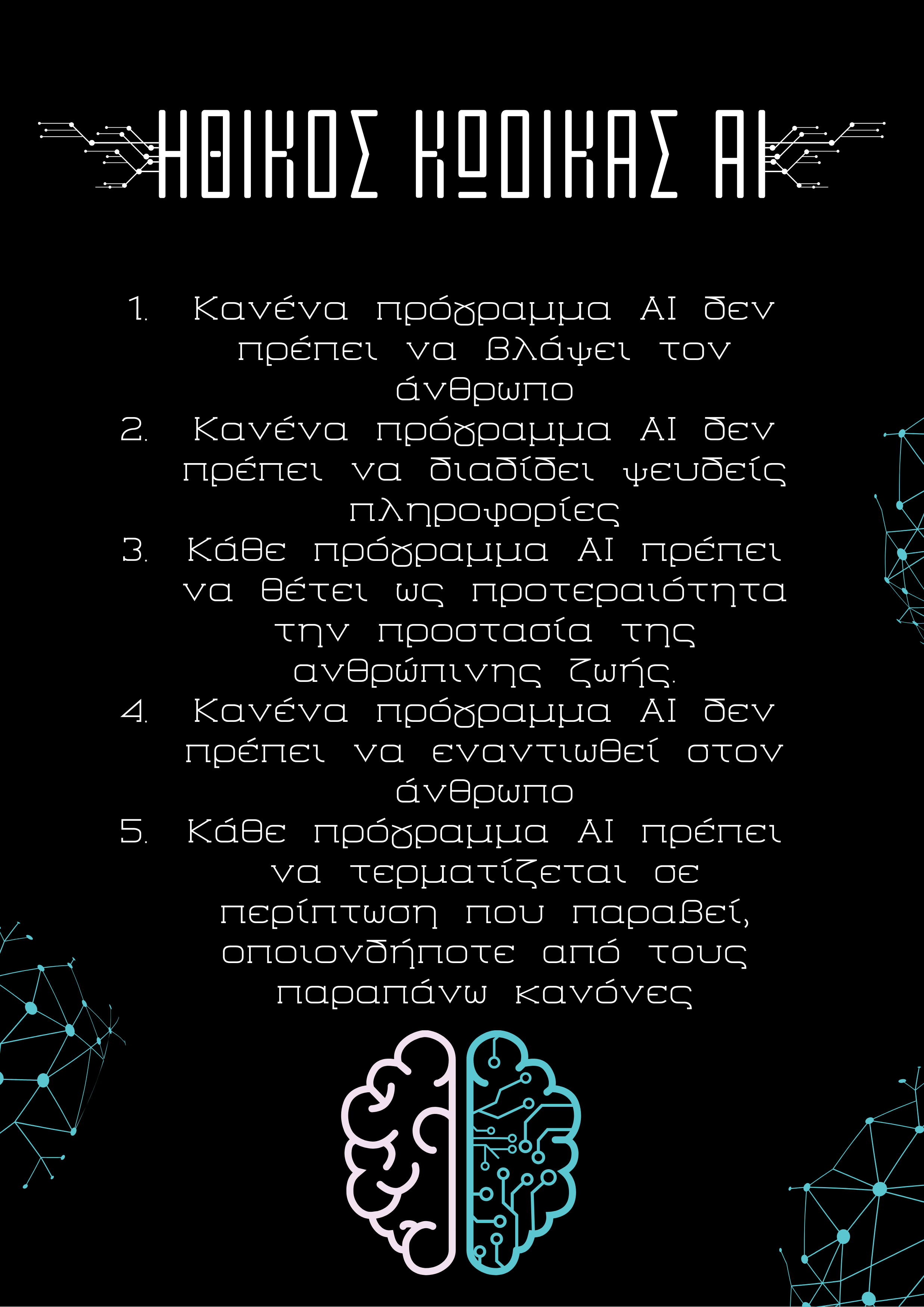

Taking Isaac Asimov’s Three Laws of Robotics as its kick-off, D-News decided to conclude its feature on Artificial…

D-News… strikes again! This time with an in-depth analysis of various aspects of Artificial Intelligence ethics by Mr. John Gougoulis, who is not only…
